Calculate hinge loss in Python

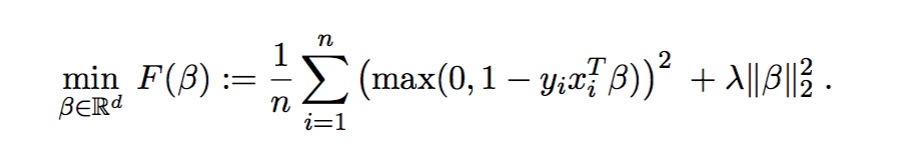

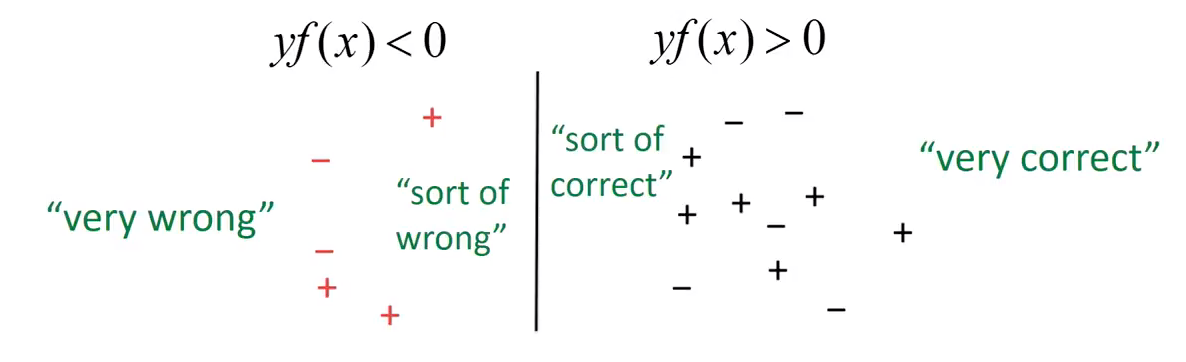

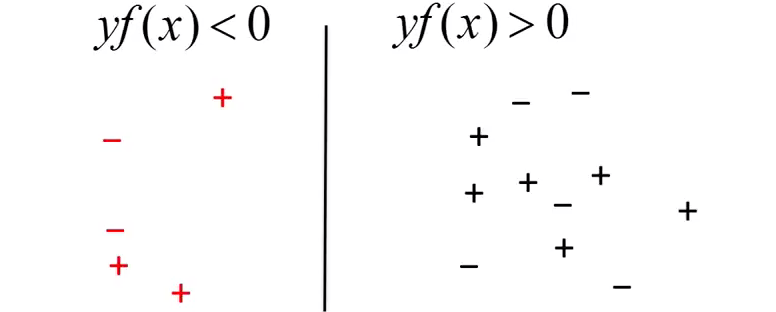

`y_true` values are expected to be -1 or 1. Hinge loss is defined as $\max(0, 1 - v)$, where $v$ is the true label multiplied by the decision value of the SVM classifier.
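As a minimal sketch of that definition, assuming NumPy is available and that labels are already in {-1, 1}: the function name `mean_hinge_loss` and the sample values are illustrative and do not come from any of the quoted sources.

```python
import numpy as np

def mean_hinge_loss(y_true, decision):
    """Average of max(0, 1 - y * f(x)) over all samples.

    y_true   : labels in {-1, 1}
    decision : raw classifier scores f(x), not class labels
    """
    y_true = np.asarray(y_true, dtype=float)
    decision = np.asarray(decision, dtype=float)
    margins = 1.0 - y_true * decision          # positive when the margin is violated
    return float(np.mean(np.maximum(0.0, margins)))

# Illustrative data: the first sample is safely classified and contributes 0.
y = [1, -1, 1, -1]
scores = [2.0, -0.5, 0.3, 1.5]
loss = mean_hinge_loss(y, scores)
print(loss)
```

Samples that are classified correctly with a margin of at least 1 add nothing to the loss; all others are penalized linearly.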

Github Gmoog Svm Implement Linear Svm Using Squared Hinge Loss In Python

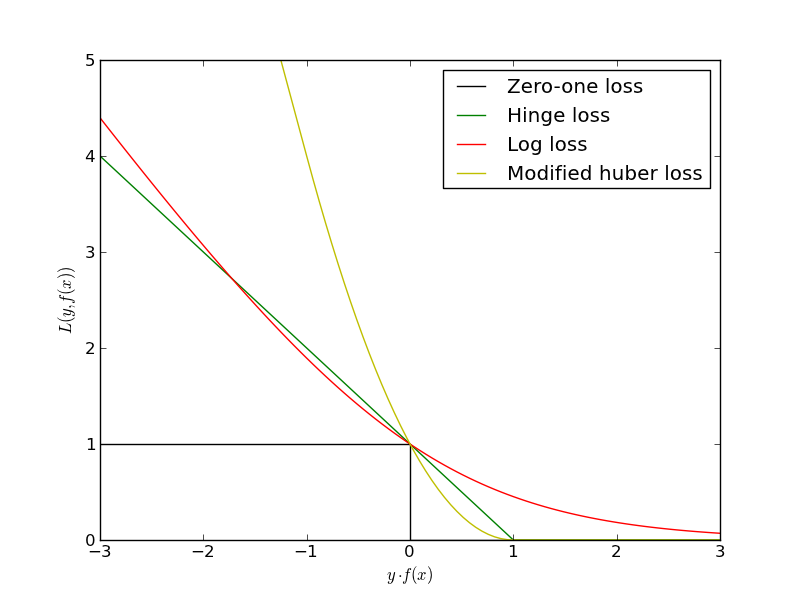

The hinge loss function is given by

$$L_H = \max(0,\ 1 - Y \cdot y)$$

where $Y$ is the label and $y$ is the predicted value. It is used when we want to make real-time decisions with … Hinge loss simplifies the mathematics for SVM while maximizing the loss as compared to log loss.

In the related hinge function, γ is the change in slope after the hinge. More can be found on the Hinge Loss Wikipedia page.

As for your equation: the hinge loss function is given by … One snippet starts from `import numpy as np` and `from sklearn.metrics import hinge_loss`, then defines a helper `def hinge_fun(actual, predicted): ...`.
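The body of `hinge_fun` is cut off in the snippet above, so the following is only one plausible way to fill it in, not the original author's code. It assumes `actual` holds labels in either 0/1 or -1/1 form and `predicted` holds raw decision scores. The same quantity can also be obtained from `sklearn.metrics.hinge_loss`, which is not called here so that the sketch depends on NumPy alone.

```python
import numpy as np

def hinge_fun(actual, predicted):
    """Mean hinge loss; `actual` may use 0/1 or -1/1 labels (assumed behaviour)."""
    actual = np.asarray(actual, dtype=float)
    predicted = np.asarray(predicted, dtype=float)
    # Map label 0 to -1 so that every label is in {-1, 1}; 1 and -1 pass through.
    signed = np.where(actual == 0, -1.0, actual)
    return float(np.mean(np.maximum(0.0, 1.0 - signed * predicted)))

# Illustrative values only.
actual = [0, 1, 1, 0]
predicted = [-0.8, 1.2, 0.4, 0.3]
print(hinge_fun(actual, predicted))
```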

This is the general hinge loss function, and in this tutorial we are … A hinge loss is a loss function used to train classifiers in machine learning. In Python, we can do that by creating a vector containing the sum of the column.

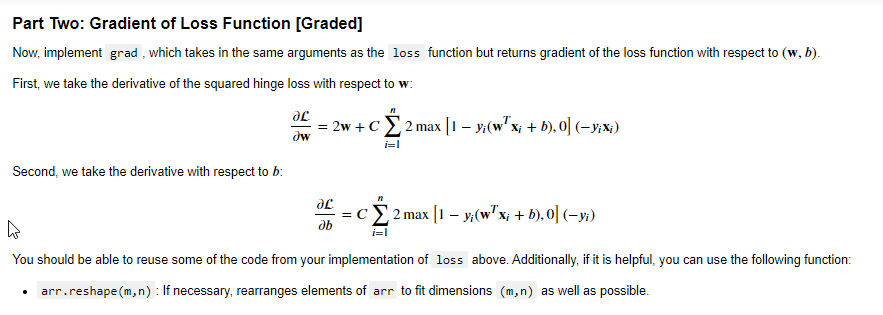

The hinge loss is a maximum-margin classification loss function and a major part of the SVM algorithm. I'm computing thousands of gradients and would like to vectorize the computations in Python. Let's look at how to implement these loss functions in Python.
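One way to vectorize the gradient computation, sketched here for a binary linear classifier with scores `X @ w` and no bias or regularization term (both are assumptions, as are the function name and the toy data). Because the hinge loss is not differentiable exactly at the hinge, this returns a subgradient that treats samples with margin exactly 1 as inactive.

```python
import numpy as np

def hinge_loss_and_grad(w, X, y):
    """Mean hinge loss and a subgradient w.r.t. w, with no Python loops.

    w : (d,) weights, X : (n, d) features, y : (n,) labels in {-1, 1}
    """
    scores = X @ w                         # (n,) decision values
    margins = 1.0 - y * scores             # (n,)
    active = margins > 0                   # samples that violate the margin
    loss = np.mean(np.maximum(0.0, margins))
    # Each active sample contributes -y_i * x_i; inactive samples contribute 0.
    grad = -(X * (y * active)[:, None]).sum(axis=0) / X.shape[0]
    return float(loss), grad

X = np.array([[1.0, 2.0], [2.0, -1.0], [-1.0, -1.0]])
y = np.array([1.0, -1.0, -1.0])
w = np.zeros(2)
loss, grad = hinge_loss_and_grad(w, X, y)
print(loss, grad)
```

The boolean `active` array does the work of an `if` inside a per-sample loop, which is what lets the whole batch be handled in a few array operations.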

If binary (0 or 1) labels are provided, we will convert them to -1 or 1. A hinge function can be expressed as $y_i = \gamma \max(x_i - \theta, 0) + \varepsilon_i$ where … For an intended output $t = \pm 1$ and a classifier score $y$, the hinge loss of the prediction $y$ is $\ell(y) = \max(0, 1 - t \cdot y)$.

Computes the squared hinge metric between `y_true` and `y_pred`. The context is SVM and the loss function is hinge loss. Compute `np_sup_zero = np.sum(mask, axis=1)`; then we need to replace the $y_i$-th column.
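The `mask` and `np_sup_zero` fragments appear to come from a vectorized multiclass SVM gradient. The sketch below is a reconstruction under that assumption: a linear score matrix `X @ W`, a margin `delta` of 1, and no regularization. Only the names `mask` and `np_sup_zero` are taken from the snippet; everything else is illustrative.

```python
import numpy as np

def multiclass_hinge_loss_and_grad(W, X, y, delta=1.0):
    """Multiclass hinge loss and its gradient w.r.t. W.

    W : (d, C) weights, X : (n, d) features, y : (n,) integer class indices
    """
    n = X.shape[0]
    rows = np.arange(n)
    scores = X @ W                                   # (n, C)
    correct = scores[rows, y][:, None]               # (n, 1) score of the true class
    margins = np.maximum(0.0, scores - correct + delta)
    margins[rows, y] = 0.0                           # the true class adds no loss
    loss = margins.sum() / n

    mask = (margins > 0).astype(float)               # 1 where a margin is violated
    np_sup_zero = np.sum(mask, axis=1)               # violations per sample
    mask[rows, y] = -np_sup_zero                     # replace the y_i-th column
    dW = X.T @ mask / n                              # (d, C)
    return float(loss), dW

X = np.eye(2)
y = np.array([0, 1])
W = np.zeros((2, 3))
loss, dW = multiclass_hinge_loss_and_grad(W, X, y)
print(loss)
print(dW)
```

Each violated margin pushes the wrong class's weights along `x_i`, and the true class's weights are pushed in the opposite direction once per violation, which is why the true-class entry of `mask` is the negative row sum.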

In your example this amounts to the … Replacing 0 with -1: `new_predicted = np.array([-1 if i == 0 else i for i in ...])`. Hinge embedding loss is used for calculating the loss when there is an input tensor `x` and a label tensor `y` whose values are 1 or -1. Hinge embedding is a good …
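For illustration, a NumPy sketch of hinge embedding loss, assuming the usual definition: the per-sample loss is `x` when the label is 1 and `max(0, margin - x)` when the label is -1, averaged over samples. The function name, the default margin of 1.0, and the data are assumptions for this example and are not a drop-in replacement for any library implementation.

```python
import numpy as np

def hinge_embedding_loss(x, y, margin=1.0):
    """Mean hinge embedding loss for inputs x and labels y in {-1, 1}."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y)
    # Label 1: the input itself is the loss. Label -1: penalize inputs below the margin.
    per_sample = np.where(y == 1, x, np.maximum(0.0, margin - x))
    return float(per_sample.mean())

# x is typically a distance between two embeddings; values here are illustrative.
x = [0.2, 1.5, 0.4]
y = [1, -1, -1]
print(hinge_embedding_loss(x, y))
```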

Mean square error (MSE): mean square error is calculated as the average of the squared differences between the predicted and actual values.

Github Tejasmhos Linear Svm Using Squared Hinge Loss This Is An Implementation From Scratch Of The Linear Svm Using Squared Hinge Loss

Understanding Loss Functions Hinge Loss By Kunal Chowdhury Analytics Vidhya Medium

Hinge Loss Gradient Computation Victor Busa Machine Learning Enthusiast

Python Hinge Loss Function Gradient W R T Input Prediction Stack Overflow

Solved Linear Svm Recall That The Unconstrained Loss Chegg Com

Neural Networks How Do I Calculate The Gradient Of The Hinge Loss Function Artificial Intelligence Stack Exchange

Hinge Loss For Binary Classifiers Youtube

Machine Learning Gradient Descent On Hinge Loss Svm Python Implmentation Stack Overflow

Double Hinge Loss Function For Positive Examples With P 0 4 And P Download Scientific Diagram

Sgd Convex Loss Functions Scikit Learn 0 11 Git Documentation